Despite decades of research on sound assessment practices, misunderstandings and myths still abound. In particular, the summative purpose of assessment continues to be an area where opinions, philosophies, and outright falsehoods can take on a life of their own and hijack an otherwise thoughtful discourse about the most effective and efficient processes.

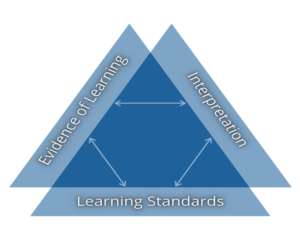

Assessment is merely the means of gathering information about student learning (Black, 2013). We either use that evidence formatively, by prioritizing feedback and identifying next steps in learning, or we use it summatively, by prioritizing verification of the degree to which students have met the intended learning goals. Remember, it is the use of assessment evidence that distinguishes the formative from the summative.

The level of hyperbole that surrounds summative assessment, especially on social media, must stop. It’s not helpful, it’s often performative, and it is sometimes even cynically motivated to simply attract followers, likes, and retweets. Outlined below are my responses to six of the most common myths about summative assessment. These aren’t the only myths, of course, but they seem to be the most persistent, and they are the six we have to undercut if we are to have authentic, substantive, and meaningful conversations about summative assessment.

Myth 1: “Summative assessment has no place in our 21st century education system”

While the format and substance of assessments can evolve, the need to summarize the degree to which students have met the learning goals (independent of what those goals are) and to report to others (e.g., parents) will always be a necessary part of any education system in any century. Whether it’s content, skills, or 21st-century competencies, the requirement to report will be ever-present.

However, it’s not just about being required; we should welcome the opportunity to report on student successes because it’s important that parents, and even our larger community or the general public, understand the impact we’re having on our students. If we started looking at the reporting process as a collective opportunity to demonstrate how effective we’ve been at fulfilling our mission, a different mindset about summative assessment altogether may emerge. It’s easy to become both insular and hyperbolic about summative assessment, but using assessment evidence for the summative purpose is part of a balanced assessment system. Cynical caricatures of summative assessment detract from meaningful dialogue.

Myth 2: “Summative assessments are really just formative assessments we choose to count toward grade determination.”

Summative assessment often involves the repacking of standards for the purpose of reaching the full cognitive complexity of the learning. Summative assessment is not just the sum of the carefully selected parts; it’s the whole in its totality where the underpinnings are contextualized.

A collection of ingredients is not a meal. It’s a meal when all of those ingredients are thoughtfully combined. Isolating the ingredients is a necessary part of preparation; we need to know which ingredients are required and in what quantities. But it’s not a meal until the ingredients are purposefully combined to make a whole.

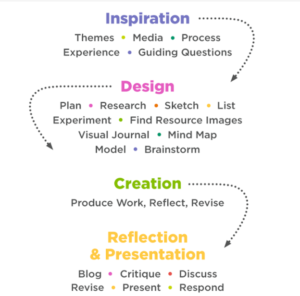

Unpacking standards to identify granular underpinnings is necessary to create a learning progression toward success. We unpack standards for teaching (formative assessment) but we repack standards for grading (summative assessment). Isolated skills are not the same thing as a synthesized demonstration of learning. Reaching the full cognitive complexity of the standards often involves the combination of skills in a more authentic application, so again, pull apart for instruction, but pull back together for grading.

Myth 3: “Summative assessment is a culminating test or project at the end of the learning.”

While it can be, summative assessment is really a moment in time when a teacher examines the preponderance of evidence to determine the degree to which students have met the learning goals or standards; it need not be limited to an epic, high-stakes event at the end. It can be a culminating test or project, as those would provide more recent evidence, but since we know some students need longer to learn, there always needs to be a pathway to recovery so that these culminating events don’t become disproportionately pressure-packed, one-shot deals.

Thinking of assessment as a verb often helps. We have, understandably, come to see assessments as nouns, and they often are, but it is crucial that teachers expand their understanding of assessment to recognize that all of the evidence examined along the way also matters; evidence is evidence. Examining all of the evidence to determine student proficiency along a few gradations of quality (i.e., a rubric) is not only a valid process, but one that should be embraced.

Myth 4: “Give students a grade and the learning stops.”

This causal relationship has never been established in the research. While it is true that grades and scores can interfere with a student’s willingness to keep learning, that reaction is not automatic. Whether the feedback is directed at the learning or at the learner matters. Avraham Kluger and Angelo DeNisi (1996) emphasized the importance of students’ responses to feedback as the litmus test for determining whether feedback was effective.

There are no perfect feedback strategies, but some responses are more favorable than others. If we provide a formative score alongside feedback and students reengage with the learning and attempt to increase their proficiency, then, as the expression goes, no harm, no foul. If they disengage from the learning, then clearly there is an issue to be addressed. But again, despite the many forceful assertions made on social media and in other forums, that relationship is not causal.

Again, context and nuance matter, especially when it comes to the quality of feedback. Remember, when it comes to feedback, substance matters more than form. Tom Guskey (2019) submits that had Ruth Butler’s (1988) study, the one so widely cited to support the assertion that grades stop learning, examined the impact of grades that were criterion-referenced and learning-focused rather than ego-based feedback directed at the learner (as in “you need to work a little harder”), the results might have been quite different.

The impact in those studies fell disproportionately on lower-achieving students, so common sense would dictate that a student who received a low score along with a comment to the effect of "You need to work harder" or "This is a poor effort" would likely want to stop learning. But a low score alongside a "now let’s work on" or "here’s what’s next" comment could produce a different response.

Myth 5: “Grades are arbitrary, meaningless, and subjective.”

Grades will be as meaningful or as meaningless as the adults make them; their existence is not the issue. Grades will be meaningful when they are representative of a gradation of quality derived from clear criteria articulated in advance. What some call subjective is really professional judgment. Judging quality against the articulated learning goals and criteria is our expertise at work.

Pure objectivity is the real myth. Teachers decide what to assess, what not to assess, the question stems or prompts, the number of questions, the format, the length, etc. We use our expertise to decide what sampling of learning provides the clearest picture. It is a mistake to think one can eliminate all teacher choice or judgment from the assessment process. During one of our recent #ATAssessment chats on Twitter, Ken O’Connor reminded participants that the late, great Grant Wiggins often said: (1) we shouldn’t use “subjective” pejoratively, and (2) the issue isn’t subjective or objective; the issue is whether our professional judgments are credible and defensible.

Myth 6: “Students should determine their own grades; they know better than us.”

Students should definitely be brought inside the process of grade determination, and even asked to participate in it and to understand how evidence is synthesized. But the teacher is the final arbiter of student learning; that is our expertise at work. This claim might sound like student empowerment, but it marginalizes teacher expertise. Are we really saying a student’s first experience outweighs a teacher’s total experience? Again, bring students inside the process and give them the full experience, but don’t diminish your expertise while doing so.

This does not have to be a zero-sum game; more student involvement need not lead to less teacher involvement. This is about expanding the process to include students at every step of the way; however, our training, expertise, and experience matter when it comes to accurately determining student proficiency. Students and parents are not the only users of assessment evidence. Many important decisions both in and out of school depend on the accuracy of what is reported about student learning, which means teachers must remain disproportionately involved in the summative process.

Combating these myths is important because there continues to be an oversimplified narrative that vilifies summative assessment as the source of all that is wrong with our assessment practices. That mindset, assertion, or narrative is not credible. Not to mention, it’s naïve and reveals a lack of understanding of how a balanced assessment system operates within a classroom.

The overall point here is that we need grounded, honest, and reasoned conversations about summative assessment that are anchored in the research, not in some performative label or hollow assertion that we defend at all costs through clever turns of phrase and quibbles over semantics.

Black, P. (2013). Formative and summative aspects of assessment: Theoretical and research foundations in the context of pedagogy. In J. H. McMillan (Ed.), SAGE handbook of research on classroom assessment (pp. 167–178). Thousand Oaks, CA: SAGE.

Butler, R. (1988). Enhancing and undermining intrinsic motivation: The effects of task-involving and ego-involving evaluation on interest and performance. British Journal of Educational Psychology, 58(1), 1–14.

Guskey, T. R. (2019). Grades versus feedback: What does the research really tell us? [Blog]. Thomas R. Guskey & Associates.

Kluger, A., & DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin, 119(2), 254–284.