This is a guest post written by Nina Pak Lui and Colin Madland.

Assessment is at the heart of formal learning environments. Assessment practices in K-12 contexts have been the subject of significant research, especially since the late 20th century. However, the assessment practices and beliefs of higher education instructors have not been researched to nearly the same degree. This likely stems from the relative lack of preparation in pedagogy and assessment that university instructors outside of Schools of Education receive (Massey et al., 2020). As a result, higher education has much to learn from K-12. In this post, we outline the misalignment between assessment and pedagogical practices in higher education, and describe two ways assessment practices in higher education can shift.

Background

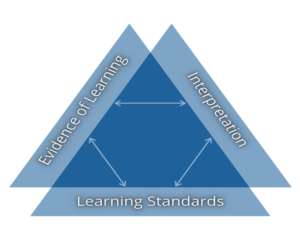

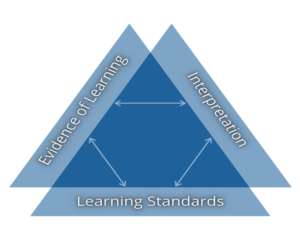

It is helpful to think about assessment in terms of a model. In Knowing what Students Know, Pellegrino et al. (2001) provide the accessible model of the “assessment triangle”, a modified version of which is shown in the accompanying figure. The assessment triangle comprises three interdependent elements: (1) a cognitive model of the domain, which can be understood for our purposes as learning standards; (2) an instrument or process used to gather evidence of proficiency; and (3) an interpretation of the evidence of learning. High-quality assessment practices require that these three elements be in alignment with each other. For example, if the learning standard specifies that learners will be able to critically analyze historical texts, but the instrument used to gather evidence only asks learners to identify a correct answer, those two elements are misaligned and the interpretation will lack validity.

In the mid- to late-20th century, assessment practices and pedagogy in higher education were in quite close alignment. The prevailing theory of learning was behaviourism, as popularized by B. F. Skinner, who argued that learning is maximized when learners receive immediate, positive feedback for supplying the correct answer to a question. This led to pedagogical practices that prioritized breaking down concepts into smaller and smaller ideas and having learners memorize the correct answers. Accordingly, assessment practices prioritized instruments filled with selected-response items requiring examinees to recognize correct answers.

Over time, however, our understanding of the cognitive processes involved in learning has evolved. We now recognize that learning is a complex social process and that knowledge is constructed through social interactions. As such, the characteristics of pedagogy in K-12, and increasingly in higher education, have shifted away from rote memorization and towards the 21st century goals of collaboration, critical thinking, analysis, creativity and life-long learning. Unfortunately, Shepard (2000) and Lipnevich et al. (2020) point out that assessment practices in higher education remain stuck in the behaviourist views of the mid-20th century, with a heavy emphasis on high-stakes selected-response tests.

For me, Nina, the stages of Chappuis & Stiggins’ Assessment Development Model (ADM) and key principles of Standards Based Learning (SBL) significantly shifted my assessment practices to reflect modern assessment theory and aims of 21st century learning. To illustrate, a “Then and Now” reflection below shows two ways assessment practices can shift in higher education:

1. From using predominantly selected-response methods, toward implementing performance-based and personal communication methods that are better aligned with, and more reflective of, course learning standards.

2. From students as passive participants in the assessment process, toward students as active users of assessment as a learning opportunity.

Then and Now

I used to stick to common types of assessment instruments used in higher education. Although learning can be experienced and demonstrated in multiple ways, I was hesitant to take pedagogical risks in the early years of teaching in higher education. Looking back, my lack of assessment literacy and my preconceived assumptions of what assessment practices should look and sound like in higher education were barriers to effective teaching and student learning. Although course learning standards were present in syllabi, I used to plan activities and assessment tasks before identifying priority standards and clarifying proficiency. Wiggins & McTighe (2011) call this the “Twin Sins” of traditional planning.

Then I learned that clearly knowing what is being assessed and choosing the optimal method depends foremost on the kinds of learning being assessed (Chappuis & Stiggins, 2019). In the planning and development stages of ADM and SBL, priority course learning standards are identified, and the underpinning learning targets are clarified with and for students (Chappuis & Stiggins, 2019; Schimmer et al., 2018). Unpacking learning standards and clarifying proficiency allows instructors to thoughtfully consider how they will summatively and formatively assess student learning (Schimmer et al., 2018; White, 2017). This process helps me select appropriate assessment methods and design assessment instruments aligned with proficiency in the learning standards. Before any assessment instruments are used, I take into consideration potential bias and barriers, and critique the overall assessment for quality (Chappuis & Stiggins, 2019). As a result of intentional planning and sound development, I am able to gather information – evidence of learning – to make formative and summative decisions based on interpretations of student learning with greater validity. Chappuis & Stiggins (2019) suggest that if there is no accuracy, there is no way to know if there has been a gain in knowledge, ability, or understanding.

Now I realize that course learning standards are cognitively complex. Students critically analyze, synthesize, make judgments, gain empathy and self-knowledge, transfer, co-create, and apply course learning in meaningful and transformative ways. These aims reflect 21st century learning goals of higher education. Wiggins & McTighe (2011) point out that knowing facts in order to recall them is superficial learning that can be quickly forgotten, whereas the ability to connect facts and create meaning is deeper learning or enduring understanding. In my current practice, assessment methods and instruments are designed for students to demonstrate higher-order thinking and meaning-making (Pak Lui & Skelding, 2021). Students continue to demonstrate their reasoning and creative abilities through written expression. They also engage in free inquiry which gives them the opportunity to choose their own questions related to the course that are of deep personal interest to them. Students communicate their learning through performance-based and personal communication assessment methods. In a free inquiry, instead of prescribing what the authentic piece should be, students choose the creative mediums and share their learning publicly (MacKenzie, 2016).

Here are a couple of examples from my practice, as recounted in a recent book chapter (Pak Lui & Skelding, 2021):

A former student investigated how to destigmatize mental health in education and had the bravery to include their own mental health journey in their authentic piece. They shared a raw and honest four-stanza poem and accompanied it with related and provoking images in the form of a photo essay. There was not a dry eye in the classroom; the community of learners were drawn into their peer’s learning at an intellectual and emotional level. Another example is of a student who inquired about the standardization of assessment in education. They too combined their research findings and unpacked their own educational experiences with high-stakes assessment by writing and performing a musical rap. The lyrics, rhythm and physical expression of the rap illustrated their key inferences and implications of the urgent need for assessment reform in education.

What I noticed as a result of using assessment methods that are a good match for assessing cognitively complex learning standards (such as written response, performance assessment, or personal communication) was an increased ability as an instructor to interpret evidence of learning. I have greater confidence that the inferences I make accurately reflect achievement of intended learning. Additionally, increasing the value and use of formative assessment practices shifted students from being passive participants in the learning process to being active users of assessment results as a learning opportunity. Students regularly receive feedback, and they are given time to act on it. Moreover, their involvement as self-assessors of their own learning leads to greater awareness of strengths and areas for improvement and growth before evaluation. As my own assessment practices shift and evolve, I notice teaching and learning becoming a genuine partnership. Students and I are able to develop relational trust, and we are more confident in taking risks in pedagogy together (Pak Lui & Skelding, 2021). It is my hope that students see that my assessment practices have clear purpose, align to course learning standards, and provide necessary support to move their learning forward. According to White (2019), “without continuous formative assessment built into the classroom, creativity would suffer, risk-taking would lack purpose, and products students create would be meaningless” (p. 33).

Concluding Thoughts

COVID-19 provides an opportunity for many university instructors to re-examine both pedagogy and assessment practices in higher education. As we look forward to establishing a new normal, research-based shifts in assessment practices can be a way for 21st century learners to experience a high-quality education.

Nina Pak Lui is an Assistant Professor of Education at Trinity Western University in Langley, British Columbia. She studies and teaches curriculum design and assessment for learning. In 2020, she won the Provost Teaching and Innovation Award. You can find her on Twitter @npaklui.

Colin Madland is a PhD candidate in Educational Technology at the University of Victoria in British Columbia where he is studying approaches to assessment in higher education. You can find him at https://cmad.land and on Twitter @colinmadland.

References

Chappuis, J., & Stiggins, R. (2019). Classroom assessment for student learning: Doing it right – Using it well (3rd ed.). Pearson Education.

Lipnevich, A. A., Guskey, T. R., Murano, D. M., & Smith, J. K. (2020). What do grades mean? Variation in grading criteria in American college and university courses. Assessment in Education: Principles, Policy & Practice, 27(5), 480–500. https://doi.org/10/ghjw3k

Massey, K. D., DeLuca, C., & LaPointe-McEwan, D. (2020). Assessment literacy in college teaching: Empirical evidence on the role and effectiveness of a faculty training course. To Improve the Academy, 39(1). https://doi.org/10/gj5ngz

MacKenzie, T. (2016). Dive into inquiry: Amplify learning and empower student voice. EdTechTeam Press.

Wiggins, G., & McTighe, J. (2011). The understanding by design guide to creating high-quality units. Association for Supervision and Curriculum Development.

Pak Lui, N., & Skelding, J. (2021). An emergent course design framework for imaginative pedagogy and assessment in higher education. In J. Cummings & I. Fayed (Eds.), Teaching in the post COVID-19 era [In Print Stage]. Springer Publishing.

Pellegrino, J. W., Chudowsky, N., & Glaser, R. (2001). Knowing what students know: The science and design of educational assessment. National Academies Press. https://doi.org/10.17226/10019

Schimmer, T., Hillman, T., & Stalets, M. (2018). Standards based learning in action: Moving from theory to practice. Solution Tree Press.

Shepard, L. A. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), 4–14. https://doi.org/10/cw9jwc

White, K. (2017). Softening the edges: Assessment practices that honor K to 12 teachers and learners. Solution Tree Press.

White, K. (2019). Unlocked: Assessment as the key to everyday creativity in the classroom. Solution Tree Press.