Education is a noble profession. It is a profession that aims to cultivate diverse thinkers and aspires to nurture personal growth. It is a profession that can lift humanity’s spirits and help humankind strive to be the best version of itself—the “great equalizer of the conditions of men,” as Horace Mann famously stated in the 19th century. However, even with great people, a worthy goal, and an admirable vision, the opposite can often be the case.

Unfortunately, education can also be the great unequalizer, where personal biases can inform practice and policy development, stifle student growth, enforce discriminatory policies, and even socially isolate students. Some researchers suggest that educators’ implicit biases may contribute to the racial achievement gap, specifically through the negative effect of teacher assumptions about students’ ability based on race, culture, or values (van den Bergh et al. 2010). Without an educator realizing it, these biases may shape an image of the ideal student, one often seen through a lens of white privilege because of society’s tendency to center whiteness. That image can distort our interactions with students of color.

Without social awareness and continuous self-monitoring, educators may let their implicit biases become an influential factor in their pedagogy, affecting everything from assessment to grading. This blog post discusses how personal biases can surface in our teaching and learning practices when educators are not diligent. I will focus on three major areas of teaching and learning: assessment, feedback, and grading.

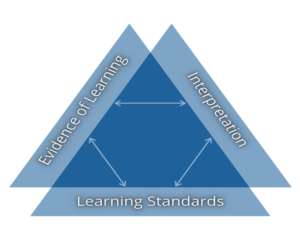

Assessment Bias

Without careful attention, teachers may create assessments that reflect their own values and experiences while ignoring those of their students. A teacher may phrase questions in the language most familiar to them or write prompts shaped by their personal experiences. As a result, students may find it hard to relate to the questions, which can leave some of them unable to perform to the best of their abilities. For assessments to be less biased, teachers must consider all backgrounds, ethnicities, genders, and identities to ensure that their own lived experience isn’t the only one represented.

By being more introspective when developing assessments, teachers can reduce the chance of producing an assessment that feels personally irrelevant to their students. That irrelevance can lead to low student performance, disinterest in the task, and even apathy toward school.

To become more aware of their biases, teachers can ask themselves the following questions while writing an assessment:

- Does the assessment give the sense that the teacher has unwavering support and is a partner in a student’s success?

- Are the questions seeking to understand the student or judge them?

- Does the teacher draw on the students’ life situations, interests, and curiosities when creating problems and prompts?

Adapted from Tomlinson (2015).

Microaggressions in Feedback

If we ignore our implicit biases when speaking with students, we risk putting both parties into what social psychologist Albert Bandura calls “a downward course of mutual discouragement” (Bandura 1997, 234). A student may react poorly to deficit-based feedback, and the teacher may respond in kind. Once this cycle starts, the student’s self-belief is at risk.

Microaggressions and subtle discriminations can exist in the feedback process, and when they do, they may severely limit feedback acceptance.

Teachers can limit bias in their feedback by building on the student’s thinking rather than their own. Consider the following examples:

Example A

Feedback that Uses Teacher Thinking: You didn’t include [these details] about [person] in your essay. Try [these words].

Feedback that Uses Student Thinking: Tell me more about these words [here]. I’m interested: why do you think [this word] didn’t work? Oh, okay, that would work. You should add what you just said to your paragraph; it was perfect.

Example B

Feedback that Uses Teacher Thinking: When I write, I try and think about [detail]. Remember when I taught you the three-step process? No? The one that is in your textbook? That’s the most effective process.

Feedback that Uses Student Thinking: What did you think about when you wrote [this]? Seems like that interests you? Yeah, I can see you are passionate about [that]. What are the first three things you did when you wrote [this]? That is an interesting place to start. Could I convince you to start here? No? Okay, that makes sense. Have to make this work for you.

Example C

Feedback that Uses Teacher Thinking: In this graph, I would start [here] because this information is important. Has anyone heard of the [rhyme name] to remember the key features of a graph? No? Oh, this helped me a lot.

Feedback that Uses Student Thinking: In this graph, what information did you think was essential for you to begin this problem? I’m surprised to hear you say that, because yesterday you said something different. What changed? Interesting, I saw you smile as you were talking. Why? Yeah, I agree you are getting this concept more. Are you using any strategies to help you learn this? Yes. Well, [that strategy] is undoubtedly helping you.

These scenarios are fictional, but the point is that teachers should always be aware of their language. Otherwise, they may inadvertently make a student feel unequal and devalued in the class and even in the school. In short, words matter.

Race Bias in Grading Practices

Teachers must judge student performance fairly and accurately; it is our professional duty. Inaccurate judgments can not only skew grades but also harm teacher-student relationships, distort a student’s self-concept, or reduce opportunities to learn (Cohen and Steele 2002). One factor that can lead to a misrepresentative grade is teacher bias related to race and ethnicity. A student’s racial or ethnic group, socioeconomic class, or gender can substantially bias a teacher’s judgment of that student’s performance. Internalized racial biases can activate stereotypes and lead teachers to make discriminatory performance evaluations (Wood and Graham 2010).

For example, research has found substantial differences in teachers’ judgments of performance across racial subgroups when teachers subconsciously subscribed to the general stereotype that African American and Latino students don’t perform as well as their White and Asian counterparts (Ready and Wright 2011).

Different minority statuses can affect how teachers perceive student performance, leading to inaccurate grades that may harm students’ perception of their academic experience (Ogbu and Simons 1998). In other words, students may come to feel that school is insignificant, unsupportive, or even harmful.

To lessen the likelihood that implicit bias influences grading, teachers can democratize the grading process. They can use evidence of learning instead of points, and they can interpret gradebook scores by their mode (the level a student demonstrates most often) rather than by their average. They can use a skills-focused curriculum instead of a content-focused one. Perhaps most important, they can involve students in grading by building more self-evaluation moments into their instruction.
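The post does not spell out how a modal reading of the gradebook should be computed, so the following is only a rough illustration. It is a minimal Python sketch, assuming rubric scores on a 1 to 4 scale for a single learning target, listed in the order the evidence was collected; the scores and the `compare_summaries` helper are hypothetical, not part of any particular gradebook system.

```python
from statistics import mean, multimode


def compare_summaries(scores: list[int]) -> tuple[float, list[int]]:
    """Return the arithmetic mean and the most frequent score(s).

    Hypothetical helper: `scores` are rubric levels for one learning
    target. multimode() returns every level tied for most frequent,
    so a tie is surfaced for the teacher instead of being averaged away.
    """
    if not scores:
        raise ValueError("need at least one score")
    return float(mean(scores)), multimode(scores)


# Made-up scores: two early struggles, then three consecutive
# demonstrations of the target at level 4.
scores = [1, 2, 4, 4, 4]
average, modes = compare_summaries(scores)
print(f"average: {average}")  # every attempt, including early ones, pulls this down
print(f"mode(s): {modes}")    # the level shown most often
```

With these invented scores the average is 3.0, even though most of the evidence, including the three most recent attempts, sits at 4; the mode reports 4. Because `multimode` returns all tied values, a split such as `[2, 2, 3, 3]` comes back as `[2, 3]`, leaving the judgment to the teacher rather than hiding it in an average of 2.5.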

School leaders should explore ways to evaluate pedagogical practices through the lenses of race and equity and observe classroom interactions between teachers and students. School leaders should also continue to provide training on white privilege and its influence on the status quo, and teachers should review their evaluations of student performance for fairness and accuracy.

The Work Ahead

Although we may see ourselves as objective and rational people, we all have biases. We all have values, beliefs, and assumptions that help us make sense of what is happening in our lives and guide our interactions with others. For the most part, these values, beliefs, and assumptions guide us in positive and productive directions, but the interplay between them and our social interactions can produce implicit biases that distort our decisions, perspectives, and actions. We must notice, monitor, and manage these distortions to achieve racial equity in school and in life. If we don’t, our unconscious biases may harm our pedagogy, our relationships with students, and our perception of their needs.

References

Bandura, A. (1997). Self-efficacy: The exercise of control. New York, NY: W. H. Freeman.

Cohen, G. L., & Steele, C. M. (2002). A barrier of mistrust: How negative stereotypes affect cross-race mentoring. In J. Aronson (Ed.), Improving academic achievement: Impact of psychological factors on education (pp. 303–327). San Diego, CA: Academic Press.

Ogbu, J. U., & Simons, H. D. (1998). Voluntary and involuntary minorities: A cultural-ecological theory of school performance with some implications for education. Anthropology and Education Quarterly, 29(2), 155–188.

Ready, D. D., & Wright, D. L. (2011). Accuracy and inaccuracy in teachers’ perceptions of young children’s cognitive abilities: The role of child background and classroom context. American Educational Research Journal. https://doi.org/10.3102/0002831210374874

Tenenbaum, H. R., & Ruck, M. D. (2007). Are teachers’ expectations different for racial minorities than for European American students? A meta-analysis. Journal of Educational Psychology, 99(2), 253–273.

Tomlinson, C. A. (2015). “Teaching up”: Teaching for excellence in academically diverse classrooms.

van den Bergh, L., Denessen, E., Hornstra, L., Voeten, M., & Holland, R. W. (2010). The implicit prejudiced attitudes of teachers: Relations to teacher expectations and the ethnic achievement gap. American Educational Research Journal, 47, 497–527.

Wood, D., & Graham, S. (2010). Why race matters: Social context and achievement motivation in African American youth. In T. Urdan & S. Karabenick (Eds.), The decade ahead: Applications and contexts of motivation and achievement (Advances in Motivation and Achievement, Vol. 16, Part B, pp. 175–209). Bingley, UK: Emerald Group Publishing.
